Jun 4, 2019

Georgia Tech researchers have created machine learning (ML) algorithms to ensure grouped data is fairly represented.

This is the first example of incorporating fairness into the popular spectral clustering technique for partitioning graph data, according to researchers. When evaluated on social networks like Facebook, their algorithms improve the groups' diversity by 10 to 34 percent on average.

Promoting fairness

ML can automate complex social and financial processes, like lending, education, and marketing. Yet for all its innovation, the potential for bias arises because many datasets contain a disproportionate number of examples from one demographic.

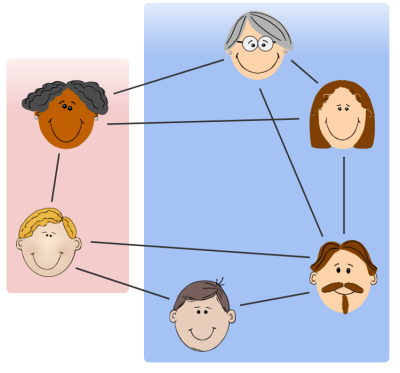

The challenge of keeping ML fair becomes only more complicated with grouped data, or clusters. Social networks, for example, rely on large graphs of data that connect various people to each other. Enough of these connections can indicate a community — valuable data for advertisers and other stakeholders.

Spectral clustering is a common ML technique to help find these communities. With the new emphasis on fairness in ML, though, ensuring these communities are diverse is becoming more important.

“Obviously, you want to figure out who the communities are, but you also want them to be diverse,” said School of Computer Science (SCS) Ph.D. student Samira Samadi.

Keeping the proportions

Samadi and her team characterize diversity as proportional representation: each demographic group should appear in every cluster in the same proportion as in the entire dataset. To achieve this, they designed clustering algorithms that find a fairer clustering when one exists in the data.
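To make the idea of proportional representation concrete, here is a minimal Python sketch that measures how far a clustering strays from the dataset-wide proportions. It is an illustration only, not the researchers' code; the function names `group_proportions` and `max_proportion_gap` and the toy labels are assumptions made for this example.

```python
from collections import Counter


def group_proportions(groups):
    """Return {group: fraction} for a list of group labels."""
    counts = Counter(groups)
    total = len(groups)
    return {g: c / total for g, c in counts.items()}


def max_proportion_gap(clusters, groups):
    """Largest absolute difference between a group's share in any cluster
    and its share in the whole dataset (0.0 means perfectly proportional).

    Hypothetical helper for illustration; not a metric taken from the paper.
    """
    overall = group_proportions(groups)
    gap = 0.0
    for cluster_id in set(clusters):
        # Group labels of the points assigned to this cluster.
        members = [g for c, g in zip(clusters, groups) if c == cluster_id]
        shares = group_proportions(members)
        for g, overall_share in overall.items():
            gap = max(gap, abs(shares.get(g, 0.0) - overall_share))
    return gap


# Toy data: eight people, two demographic groups of equal size.
groups = ["a", "a", "b", "b", "a", "a", "b", "b"]
fair_clusters = [0, 1, 0, 1, 0, 1, 0, 1]    # each cluster is half "a", half "b"
unfair_clusters = [0, 0, 1, 1, 0, 0, 1, 1]  # each cluster holds a single group

print(max_proportion_gap(fair_clusters, groups))
print(max_proportion_gap(unfair_clusters, groups))
```

A gap of zero means every cluster mirrors the makeup of the full dataset, which is the notion of diversity described above; larger values mean at least one group is over- or underrepresented in some cluster.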

The researchers tested their algorithms on a natural variant of the stochastic block model, a famous random graph model used to study the performance of clustering algorithms. On this model, they proved that their algorithms recover the fairer clustering in the data with high probability.
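For readers unfamiliar with the stochastic block model, the following Python sketch samples a graph from the standard two-block version and recovers the blocks with plain spectral clustering. It is a rough illustration under stated assumptions: it uses the textbook model rather than the researchers' variant, it has no fairness constraint, and the function names and edge probabilities are made up for the example.

```python
import numpy as np


def sample_sbm(block_sizes, p_in, p_out, seed=0):
    """Sample a symmetric adjacency matrix from a standard stochastic block model.

    Nodes in the same block are linked with probability p_in, nodes in
    different blocks with probability p_out. (Hypothetical helper.)
    """
    rng = np.random.default_rng(seed)
    labels = np.repeat(np.arange(len(block_sizes)), block_sizes)
    same_block = labels[:, None] == labels[None, :]
    probs = np.where(same_block, p_in, p_out)
    # Draw each undirected edge once (upper triangle), then mirror it.
    upper = np.triu(rng.random(probs.shape) < probs, k=1)
    adjacency = (upper | upper.T).astype(float)
    return adjacency, labels


def spectral_bipartition(adjacency):
    """Split a graph in two using the sign of the Fiedler vector, i.e. the
    eigenvector for the second-smallest eigenvalue of the Laplacian D - A."""
    degrees = adjacency.sum(axis=1)
    laplacian = np.diag(degrees) - adjacency
    _, eigenvectors = np.linalg.eigh(laplacian)  # eigenvalues in ascending order
    fiedler = eigenvectors[:, 1]
    return (fiedler > 0).astype(int)


adjacency, truth = sample_sbm([50, 50], p_in=0.5, p_out=0.05)
predicted = spectral_bipartition(adjacency)

# Cluster names are arbitrary, so score the better of the two labelings.
agreement = np.mean(predicted == truth)
accuracy = max(agreement, 1 - agreement)
print(f"fraction of nodes placed in the correct block: {accuracy:.2f}")
```

Because the planted blocks are known in advance, a model like this lets researchers check whether an algorithm recovers the intended clustering, which is the kind of guarantee described above.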

Yet the algorithms are not just theoretical. The researchers also tested them on empirical datasets and showed that the algorithms can lead to more proportional clusters with minimal damage to the interconnectivity of the groups, that is, the quality of the clusters.

“Designing fair clustering algorithms helps ML to draw a more diverse image of communities in a network,” Samadi said. “This not only leads to less representational bias toward specific demographics, but could also help marketers to maximize their full potential customer base.”

The researchers presented their work in the paper “Guarantees for Spectral Clustering with Fairness Constraints” at the International Conference on Machine Learning (ICML) in Long Beach, California, from June 9 to 15. Samadi co-wrote the paper with SCS Assistant Professor Jamie Morgenstern, Rutgers postdoctoral researcher Matthäus Kleindessner, and Rutgers Assistant Professor Pranjal Awasthi.