Apr 17, 2019

For several years, scientists have been training intelligent agents on images and other data so that machines can learn to recognize what they see. Researchers are now starting to work toward training robots equipped with these stores of data to be able to make better autonomous decisions.

Zsolt Kira, associate director of the Machine Learning Center at Georgia Tech, and Georgia Tech Ph.D. student Chih-Yao Ma have published new research that improves on how autonomous robots move in their surroundings. This work is in collaboration with Caiming Xiong, director of Salesforce Research, and researchers from the University of Maryland, College Park. Georgia Tech professor Ghassan AlRegib and Ph.D. student Jiasen Lu are also paper authors.

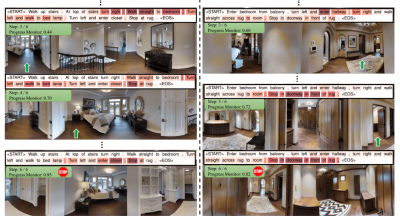

Existing methods have allowed robots to navigate unknown environments by combining a 360-degree panoramic view of their surroundings and programmed instructions that describe how to accomplish a goal. The goal could be to locate a doctor’s office in an office complex or find the fastest exit route in a building.

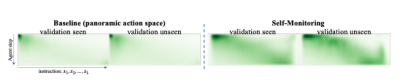

The new Georgia Tech research by Kira and his team improves the accuracy with which a robot completes an assigned navigation task by 8 percent, a significant increase for autonomous navigation systems. The research method includes new mechanisms that add reasoning skills to autonomous systems, as well as the ability for them to essentially correct their mistakes.

“By teaching robots to more effectively navigate unknown environments, robots could be used in the household or for autonomous vehicles,” said Kira.

The researchers say their method’s improved accuracy for navigation could be particularly helpful in the future for scenarios that might be too dangerous for humans, such as a robot performing search and rescue or entering a burning building. Or robots could simply take up more mundane (but essential) tasks like making and serving morning coffee.

Kira’s team began with an existing robotics technique, the attention mechanism. The mechanism teaches a robot to move autonomously through its environment using written instructions. It also helps the robot identify which step of the instructions should be completed next.
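As a rough illustration of how an attention mechanism can pick out the next instruction step, the sketch below scores each step against the agent's current state and normalizes the scores with a softmax. This is a generic dot-product attention example, not the team's model; the function name `attention_weights`, the two-dimensional embeddings, and the state vector are all made up for illustration.

```python
import math

def attention_weights(state, step_embeddings):
    """Softmax over dot-product scores between the agent state and each step."""
    scores = [sum(s * e for s, e in zip(state, emb)) for emb in step_embeddings]
    peak = max(scores)  # subtract the max score for numerical stability
    exps = [math.exp(score - peak) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]

# Hypothetical embeddings for three instruction steps (assumed values).
steps = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
state = [0.2, 2.0]  # hypothetical summary of what the agent currently sees

weights = attention_weights(state, steps)
# The step with the largest weight is the one the agent would focus on next.
next_step = max(range(len(weights)), key=weights.__getitem__)
```

In a real system the embeddings and state would come from trained networks rather than hand-picked numbers.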

Kira and Ma added a reasoning component to allow the robot to estimate how well it was doing in completing the task, and how close it was to finishing it. They also added a new “rollback” function. Rollback uses a neural network trained to help the agent determine if it has made a mistake while following instructions. If it determines a mistake has been made, the agent reverts to its most recent successfully completed task in an effort to correct the error. This improvement significantly reduces the number of steps needed to reach the goal.
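The rollback idea can be sketched in a few lines, under heavy simplification. In the toy example below, a fixed lookup table (`progress`) stands in for the trained progress estimator, the "policy" just tries the first untried neighbor, and the room names are invented. None of this is taken from the papers; it only shows the control flow of moving, checking estimated progress, and stepping back when progress drops.

```python
def navigate(start, goal, neighbors, progress, max_steps=20):
    """Walk toward the goal, undoing any move that lowers estimated progress."""
    position = start
    tried = set()      # (from, to) moves that have already been attempted
    trace = [start]    # every location visited, including rollbacks
    for _ in range(max_steps):
        if position == goal:
            break
        candidates = [n for n in neighbors[position] if (position, n) not in tried]
        if not candidates:
            break
        nxt = candidates[0]  # stand-in policy: first untried neighbor
        tried.add((position, nxt))
        trace.append(nxt)
        if progress[nxt] < progress[position]:
            trace.append(position)  # rollback: return to the last good location
        else:
            position = nxt
    return trace

# Hypothetical floor plan and progress estimates (assumed values).
neighbors = {
    "hall": ["closet", "stairs"],
    "closet": ["hall"],
    "stairs": ["office", "hall"],
    "office": [],
}
progress = {"hall": 0.2, "closet": 0.1, "stairs": 0.6, "office": 1.0}

trace = navigate("hall", "office", neighbors, progress)
print(trace)
```

Here the agent first wanders into the closet, sees its estimated progress fall, returns to the hall, and then continues to the office by way of the stairs.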

“These added components helped increase the accuracy of the attention mechanism and led to higher success rates in performance or completing the set of instructions,” said Kira.

The work is published in two papers, “Self-Monitoring Navigation Agent via Auxiliary Progress Estimation” and “The Regretful Agent: Heuristic-Aided Navigation through Progress Estimation.” The papers will be presented at the International Conference on Learning Representations (ICLR), May 6-9, and the Computer Vision and Pattern Recognition (CVPR) conference, June 16-20, respectively.